Introduction

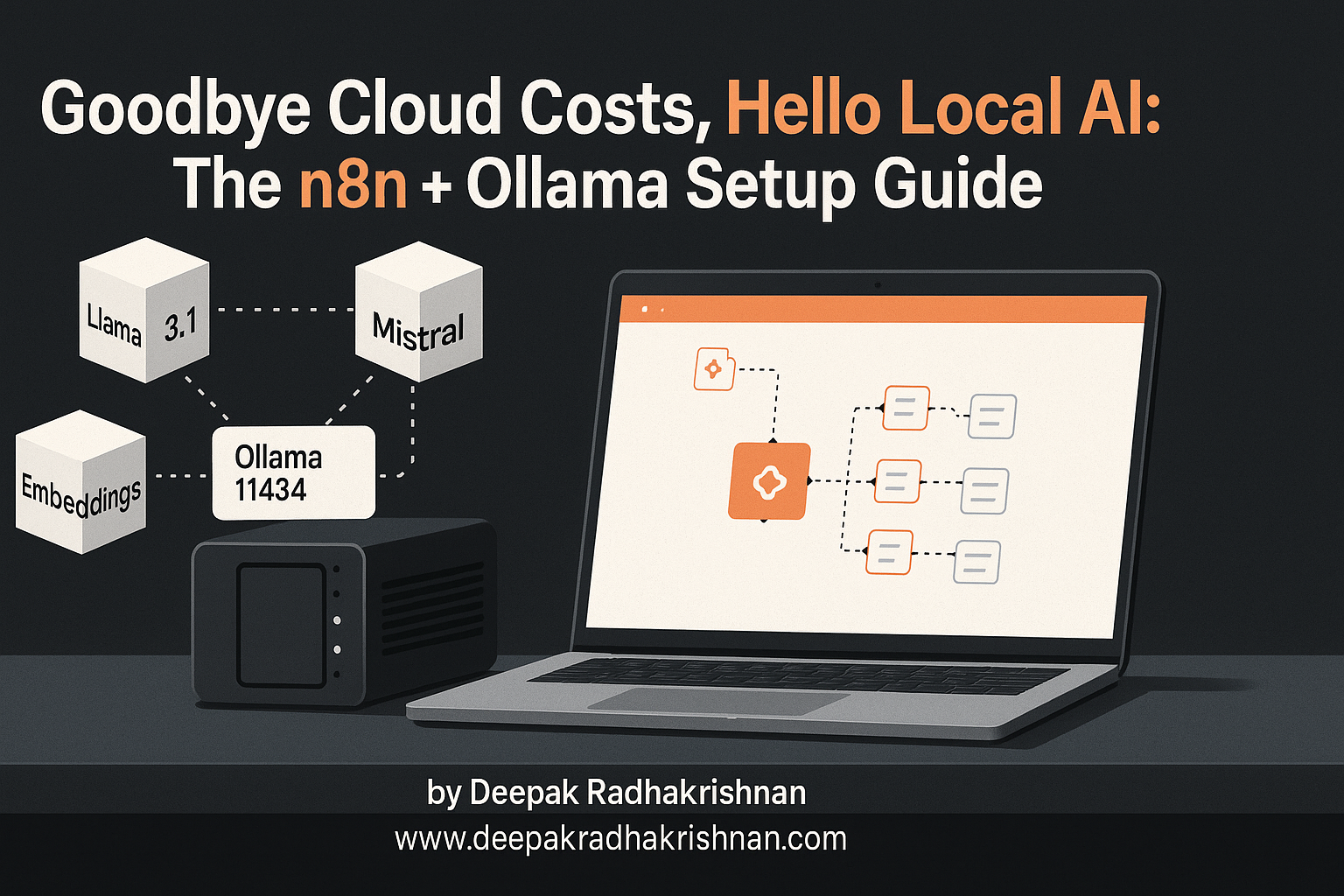

Pay-per-token bills got you flinching every time a prompt runs? You’re not alone. The combo of n8n for automation and Ollama for on-device LLMs gives you private, fast, and (after hardware) essentially zero-marginal-cost AI. In this guide, you’ll spin up Ollama locally, wire it into n8n’s AI nodes, and build a practical workflow (chat + RAG) that never leaves your machine. We’ll cover models that work well today, an OpenAI-compatible route (handy for existing n8n nodes), embeddings, and common gotchas like ports, model names, and token limits. By the end, you’ll have a share-worthy local AI stack that feels cloud-grade without the cloud tax. (Ollama’s OpenAI-compatible API is officially “experimental,” but it works great for most n8n use cases.) (Ollama Documentation)

Why n8n + Ollama Right Now

Running LLMs locally brings three big wins:

- Cost control: No per-token fees. Your GPU/CPU does the work.

- Privacy: Data never leaves your box—huge for sensitive automations.

- Velocity: n8n’s AI nodes + Ollama’s local models let you prototype fast, then scale carefully.

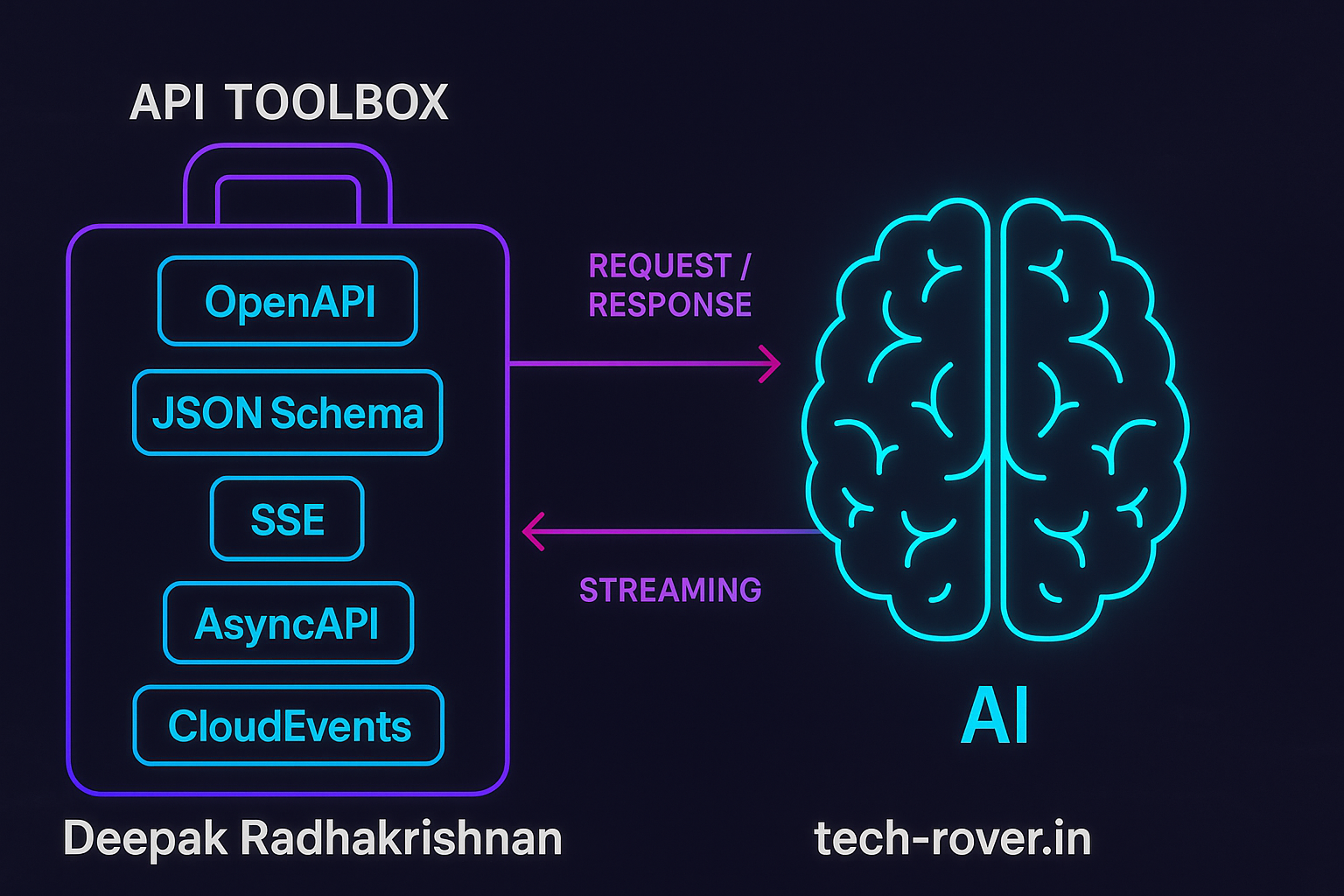

Ollama ships an OpenAI-compatible endpoint (/v1/*) so tools expecting OpenAI can talk to your local model. This is perfect for mixing n8n’s OpenAI-style nodes with your local backend. Just remember it’s experimental and occasionally changes; if you need every feature, Ollama’s native REST API is the source of truth. (Ollama Documentation)

What You’ll Build

- Local LLM chat inside n8n using Ollama (e.g., Llama 3.1 or Mistral).

- Local RAG using n8n’s Embeddings Ollama node to vectorize your files and answer questions with citations. (n8n Docs)

We’ll show both:

- OpenAI-compatible path (point n8n’s OpenAI nodes to Ollama), and

- Native Ollama nodes (Embeddings + Model).

Prerequisites

- A machine with a recent CPU; for best results, an NVIDIA GPU with up-to-date drivers.

- Docker (recommended) or native installs.

- n8n 1.x or later and the latest Ollama release.

Step 1 — Run Ollama Locally

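Docker (recommended). The --gpus all flag requires the NVIDIA Container Toolkit on the host; omit it on CPU-only machines:

    docker run -d --name ollama \
      -p 11434:11434 \
      -v ollama:/root/.ollama \
      --gpus all \
      ollama/ollama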

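Then pull a model. With the Docker setup above, the ollama CLI lives inside the container, so run it through docker exec (on a native install, drop the "docker exec ollama" prefix):

    # Examples: Llama 3.1 8B, Mistral 7B
    docker exec ollama ollama pull llama3.1
    docker exec ollama ollama pull mistral

List installed models:

    docker exec ollama ollama list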

The default API listens on http://localhost:11434. Ollama also exposes OpenAI-compatible endpoints under /v1. (GitHub)

Quick API smoke test (chat completions):

    curl http://localhost:11434/v1/chat/completions \
      -H "Content-Type: application/json" \
      -d '{
        "model": "llama3.1",
        "messages": [{"role":"user","content":"Say hello from local AI"}]
      }'

OpenAI-compat is experimental; if you hit quirks, prefer Ollama’s native API. (Ollama Documentation)
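If you'd rather script the smoke test than retype curl, here is a minimal Python sketch of the same request using only the standard library. The helper names (build_chat_request, first_reply, ask) are made up for illustration, and the response parsing assumes the usual OpenAI-style shape with a choices list.

```python
import json
import urllib.request

# Assumption: Ollama is listening on its default port on this machine.
OLLAMA_OPENAI_BASE = "http://localhost:11434/v1"


def build_chat_request(prompt, model="llama3.1", base_url=OLLAMA_OPENAI_BASE):
    """Build (but do not send) a POST to the OpenAI-compatible chat endpoint."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=f"{base_url}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def first_reply(response_body):
    """Pull the assistant text out of an OpenAI-style response dict."""
    return response_body["choices"][0]["message"]["content"]


def ask(prompt, **kwargs):
    """Send the request; needs a running Ollama server with the model pulled."""
    request = build_chat_request(prompt, **kwargs)
    with urllib.request.urlopen(request, timeout=120) as resp:
        return first_reply(json.load(resp))
```

Calling ask("Say hello from local AI") should return the model's reply as a string once the server is up. Building the request separately from sending it makes the payload easy to inspect when something fails.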

Step 2 — Run n8n

Docker:

    docker run -d --name n8n \
      -p 5678:5678 \
      -v n8n_data:/home/node/.n8n \
      --restart unless-stopped \
      n8nio/n8n

Open http://localhost:5678 to access the UI.

Step 3 — Connect n8n to Ollama (Two Paths)

Path A: Use n8n’s OpenAI-Style Nodes with Ollama (OpenAI-Compatible)

This is handy when you already built workflows around OpenAI nodes.

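- In n8n → Credentials → OpenAI, set:

  - Base URL: http://localhost:11434/v1 (if n8n itself runs in Docker, localhost points at the n8n container, so put both containers on one Docker network and use http://ollama:11434/v1 instead)

  - API Key: any non-empty string (Ollama ignores it)

- In your OpenAI Chat or OpenAI nodes, set Model to the exact name you pulled (e.g., llama3.1, mistral).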

This works because Ollama speaks a subset of OpenAI’s API. Just note some params differ (e.g., legacy /v1/completions vs chat), and not all features are 1:1. If a node expects OpenAI-only features (like Assistants), use native Ollama nodes instead. (Ollama)

Common issue: “Can’t connect to OpenAI with custom base URL.”

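Solution: make sure the Base URL ends in /v1, the model name matches an entry in ollama list, and the Ollama server is reachable from wherever n8n runs (localhost:11434 for a native n8n, the Ollama container name if n8n runs in Docker). Community threads confirm the pattern. (n8n Community)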
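The base-URL rules are easy to get subtly wrong, so here is a small sketch that applies them in one place. The function name and the n8n_in_docker flag are hypothetical, and the container name "ollama" assumes you started Ollama with --name ollama on a network n8n shares.

```python
from urllib.parse import urlsplit, urlunsplit


def normalize_base_url(url, n8n_in_docker=False, ollama_host="ollama"):
    """Return a Base URL ending in /v1 that n8n can actually reach.

    Expects a full URL including the scheme (http://...).
    """
    parts = urlsplit(url.strip())
    host = parts.hostname or "localhost"
    port = parts.port or 11434  # Ollama's default port
    # Inside a container, localhost is the n8n container itself.
    if n8n_in_docker and host in ("localhost", "127.0.0.1"):
        host = ollama_host
    path = parts.path.rstrip("/")
    if not path.endswith("/v1"):
        path += "/v1"
    return urlunsplit((parts.scheme or "http", f"{host}:{port}", path, "", ""))
```

For example, normalize_base_url("http://localhost:11434/", n8n_in_docker=True) yields http://ollama:11434/v1, which is the value to paste into the credential.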

Path B: Use n8n’s Native Ollama Nodes

n8n ships dedicated Ollama AI nodes (and an Embeddings Ollama node) so you can stay native and avoid OpenAI-compat edge cases.

- Embeddings Ollama → generate vectors locally.

- Ollama Model (message/completion sub-nodes) → run prompts against local models.

Docs & integration pages outline params and model names. (n8n Docs)
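To see roughly what an embeddings call looks like outside n8n, here is a hedged sketch against Ollama's native API. It assumes the /api/embed endpoint, which takes an "input" field and returns an "embeddings" list; older Ollama releases expose /api/embeddings with a "prompt" field instead, so check your version. The helper names are illustrative.

```python
import json
import urllib.request


def build_embed_request(texts, model="mxbai-embed-large",
                        host="http://localhost:11434"):
    """Build a POST that asks Ollama to embed a batch of strings."""
    payload = {"model": model, "input": list(texts)}
    return urllib.request.Request(
        url=f"{host}/api/embed",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def parse_embeddings(response_body):
    """Return the vectors, checking that they all share one dimension."""
    vectors = response_body["embeddings"]
    if len({len(v) for v in vectors}) > 1:
        raise ValueError("embedding vectors have mixed dimensions")
    return vectors


def embed(texts, **kwargs):
    """Send the request; needs a running server with the model pulled."""
    request = build_embed_request(texts, **kwargs)
    with urllib.request.urlopen(request, timeout=120) as resp:
        return parse_embeddings(json.load(resp))
```

Vectors from different embedding models are not comparable, so index and query with the same model name.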

Step 4 — Build a Private Chatbot in n8n (Local LLM)

Minimal flow:

- Webhook (GET/POST) receives prompt.

- Ollama Model (Message) node:

  - Model: llama3.1 (or your pick)

  - System Prompt: “You are a helpful assistant. Keep replies concise.”

  - User Message: {{$json.body.prompt}} for a POST body (use {{$json.query.prompt}} for a GET query string, since the Webhook node nests incoming data under body and query)

- Respond to Webhook with the model’s text.

OpenAI-compatible variant: replace the Ollama Model node with an OpenAI Chat node pointing at Ollama’s /v1 as above. (Ollama)
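For a feel of what the model node does with those three fields, here is a minimal sketch of the equivalent call to Ollama's native /api/chat endpoint. The function names are hypothetical. The payload shape (model, messages, stream) and the message.content field in the reply follow Ollama's native chat API as I understand it, so verify against your installed version.

```python
import json
import urllib.request

OLLAMA_HOST = "http://localhost:11434"  # assumption: default local port
SYSTEM_PROMPT = "You are a helpful assistant. Keep replies concise."


def build_chat_payload(prompt, model="llama3.1", system=SYSTEM_PROMPT):
    """Turn the webhook's prompt into a non-streaming chat payload."""
    prompt = (prompt or "").strip()
    if not prompt:
        raise ValueError("prompt is empty")
    return {
        "model": model,
        "stream": False,  # ask for one JSON object instead of a stream
        "messages": [
            {"role": "system", "content": system},
            {"role": "user", "content": prompt},
        ],
    }


def extract_reply(response_body):
    """Return the assistant text from a native chat response."""
    return response_body["message"]["content"].strip()


def chat(prompt, host=OLLAMA_HOST):
    """Send the payload; needs a running server with the model pulled."""
    request = urllib.request.Request(
        url=f"{host}/api/chat",
        data=json.dumps(build_chat_payload(prompt)).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=120) as resp:
        return extract_reply(json.load(resp))
```

Rejecting an empty prompt up front mirrors what you would want the webhook to do before spending GPU time on a blank request.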

Step 5 — Local RAG with Embeddings Ollama

- Read Binary Files (PDFs, docs).

- Split Text (n8n text ops, e.g., 1–2k chars with overlap).

- Embeddings Ollama node:

  - Model: embeddings-capable variant (e.g., mxbai-embed-large or other embeddings models available via Ollama)

- Store vectors in your DB or a lightweight vector store (SQLite table with cosine distance, or a plugin you prefer).

- Retriever step → Top-k chunks.

- Ollama Model (Message) with a prompt that includes retrieved context (“Answer using only the CONTEXT…”).

n8n’s docs cover the Embeddings Ollama node and how sub-nodes handle parameters across items. (n8n Docs)
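The splitting and prompt-assembly steps are simple enough to sketch in a few lines. This is one possible approach, not the n8n implementation: the 1500/200 sizes are just a point inside the 1–2k range mentioned above, and max_context_chars is a made-up knob for keeping the prompt inside a small model's context window.

```python
def split_text(text, size=1500, overlap=200):
    """Cut text into fixed-size character chunks that overlap slightly."""
    if overlap >= size:
        raise ValueError("overlap must be smaller than size")
    step = size - overlap
    return [text[i:i + size] for i in range(0, len(text), step)]


def build_prompt(question, chunks, max_context_chars=6000):
    """Join retrieved chunks (best first) until the character budget is hit."""
    picked, used = [], 0
    for chunk in chunks:
        if used + len(chunk) > max_context_chars:
            break
        picked.append(chunk)
        used += len(chunk)
    context = "\n---\n".join(picked)
    return (
        "Answer using only the CONTEXT below.\n\n"
        f"Question: {question}\n\n"
        f"CONTEXT:\n{context}"
    )
```

The overlap means a sentence that straddles a chunk boundary still appears whole in at least one chunk, at the cost of storing a little duplicate text.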

Recommended Local Models (2025 Snapshot)

- General chat: Llama 3.1 8B/70B (choose to fit your VRAM), Mistral 7B Instruct for speed.

- Coding: Code Llama or newer code-tuned variants.

- Embeddings: mxbai family or text-embedding models available via Ollama library.

Check the Ollama Library for current tags and sizes; pull what fits your hardware. (Ollama)

Pro tip: Start with 7–9B models for responsiveness, then graduate to bigger models if quality demands it.

Advanced Techniques

Function Calling & Tools

Recent community tests highlight strong tool-use with modern models (e.g., Llama 3.1, Mistral). When building tool-use flows in n8n, keep tool schemas compact and validate model outputs. (Model quality varies—benchmark in your own setup.) (Collabnix)
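Validating model output can be as plain as checking the tool name and argument types before anything runs. Below is a minimal sketch. The two tools and the {"name": ..., "arguments": ...} shape are assumptions chosen for illustration, so adapt them to whatever format your model and n8n flow actually emit.

```python
import json

# Hypothetical, deliberately compact tool schemas: argument name -> type.
TOOLS = {
    "get_weather": {"city": str},
    "create_ticket": {"title": str, "priority": int},
}


def validate_tool_call(raw, tools=TOOLS):
    """Parse a model's tool call and reject anything off-schema."""
    try:
        call = json.loads(raw)
    except json.JSONDecodeError as exc:
        raise ValueError(f"model output is not valid JSON: {exc}") from exc
    if not isinstance(call, dict):
        raise ValueError("tool call must be a JSON object")
    name, args = call.get("name"), call.get("arguments")
    if not isinstance(name, str) or name not in tools:
        raise ValueError(f"unknown tool: {name!r}")
    if not isinstance(args, dict):
        raise ValueError("arguments must be a JSON object")
    spec = tools[name]
    if set(args) != set(spec):
        raise ValueError(f"expected arguments {sorted(spec)}, got {sorted(args)}")
    for key, expected in spec.items():
        # Exact type match, so True is not accepted where an int is expected.
        if type(args[key]) is not expected:
            raise ValueError(f"{key} must be {expected.__name__}")
    return name, args
```

On a ValueError, a common pattern is to feed the error message back to the model for one retry and then fall back to a plain-text answer.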

Streaming & Latency

n8n nodes that support streaming pair nicely with Ollama’s token streaming for snappy UX. If your flow components don’t stream, buffer chunks with an n8n Function node.
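Buffering a stream mostly means concatenating the pieces until the server says it is done. The sketch below assumes the native /api/chat streaming format, where each line is a JSON object carrying a message.content fragment and a done flag; collect_stream is a made-up helper name.

```python
import json


def collect_stream(lines):
    """Concatenate streamed chat fragments into one reply string."""
    parts = []
    for line in lines:
        if isinstance(line, bytes):
            line = line.decode("utf-8")
        line = line.strip()
        if not line:
            continue  # skip keep-alive blank lines
        event = json.loads(line)
        parts.append(event.get("message", {}).get("content", ""))
        if event.get("done"):
            break  # the final object marks the end of the reply
    return "".join(parts)
```

Because an HTTP response object from urllib iterates line by line, you can pass it straight in as the lines argument.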

Multi-model Routing

Use a small model for quick classification, then route “hard” queries to a bigger local model. n8n’s branching makes this trivial.
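The routing decision itself is tiny. Here is a sketch of the idea with the classifier left pluggable: looks_hard is a crude keyword heuristic standing in for the small-model classification call, and the model tags are assumptions, so substitute whatever ollama list shows on your machine.

```python
SMALL_MODEL = "mistral"        # assumption: fast default model
LARGE_MODEL = "llama3.1:70b"   # assumption: bigger model for hard queries

HARD_HINTS = ("analyze", "refactor", "prove", "step by step", "compare")


def looks_hard(query):
    """Stand-in classifier; swap in a call to your small model here."""
    text = query.lower()
    return len(text.split()) > 40 or any(hint in text for hint in HARD_HINTS)


def pick_model(query, classify=looks_hard):
    """Return the model name a query should be sent to."""
    return LARGE_MODEL if classify(query) else SMALL_MODEL
```

In n8n the same decision maps onto an IF or Switch node placed after the classification step, with one branch per model.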

Common Pitfalls & Fixes

- Port/Network: Ollama must be reachable at http://localhost:11434. If you’re running n8n in Docker on another container, put both on the same Docker network and use the container name (http://ollama:11434). (n8n Community)

- Model names: Use the exact name from ollama list (e.g., llama3.1). Mismatches cause 404s. (GitHub)

- OpenAI-compat quirks: Some params/endpoints differ (and may change). If a node fails oddly, try the native Ollama nodes. (Ollama Documentation)

- Context window: Smaller models have smaller windows. Chunk and retrieve; don’t paste books into a single prompt.

- GPU memory: Bigger models need more VRAM. Start with 7B if you’re unsure. Ollama Library lists sizes. (Ollama)
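Model-name mismatches are the easiest pitfall to check for automatically. This sketch compares a requested name against the JSON that Ollama's /api/tags endpoint returns. It assumes that response has a models list whose entries carry a name such as llama3.1:latest, and that an untagged name means the latest tag. The helper names are illustrative.

```python
import difflib


def with_tag(name):
    """Treat an untagged model name as the :latest tag."""
    return name if ":" in name else f"{name}:latest"


def installed_models(tags_response):
    """Collect model names from an /api/tags style response dict."""
    return sorted(m["name"] for m in tags_response.get("models", []))


def find_model(requested, tags_response):
    """Return the installed name matching the request, or None."""
    wanted = with_tag(requested)
    return wanted if wanted in installed_models(tags_response) else None


def suggest(requested, tags_response):
    """Offer close installed names when the requested one is missing."""
    return difflib.get_close_matches(
        with_tag(requested), installed_models(tags_response)
    )
```

Running find_model before a workflow goes live turns a confusing 404 into a clear "model not installed" message, and suggest helps catch typos.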

Practical Example: n8n RAG Workflow (JSON Import)

Paste into n8n → Import from URL/Clipboard, then set your own file paths and DB step.

{

"nodes": [

{

"id": "webhook",

"name": "Ask (Webhook)",

"type": "n8n-nodes-base.webhook",

"parameters": { "httpMethod": "POST", "path": "ask-local" },

"typeVersion": 1,

"position": [260, 240]

},

{

"id": "split",

"name": "Split to Chunks",

"type": "n8n-nodes-base.function",

"parameters": {

"functionCode": "// Webhook POST payloads arrive under json.body.\nconst text = (items[0].json.body || {}).text || '';\nconst size = 1500, overlap = 200;\nconst chunks = [];\nfor (let i = 0; i < text.length; i += (size - overlap)) {\n  chunks.push({ chunk: text.slice(i, i + size) });\n}\nreturn chunks.map(c => ({ json: c }));"

},

"typeVersion": 2,

"position": [620, 240]

},

{

"id": "embed",

"name": "Embeddings (Ollama)",

"type": "n8n-nodes-langchain.embeddingsOllama",

"parameters": {

"model": "mxbai-embed-large",

"text": "={{$json.chunk}}"

},

"typeVersion": 1,

"position": [880, 240],

"credentials": { "ollamaApi": "Ollama Local" }

},

{

"id": "retrieve",

"name": "Top-k Retrieve",

"type": "n8n-nodes-base.function",

"parameters": {

"functionCode": "// TODO: Replace with your vector DB lookup.\n// This stub just echoes the first few chunks for demo.\nreturn items.slice(0,3);"

},

"typeVersion": 2,

"position": [1140, 240]

},

{

"id": "llm",

"name": "Answer (Ollama Model)",

"type": "n8n-nodes-langchain.llmMessageOllama",

"parameters": {

"model": "llama3.1",

"systemMessage": "You are a concise expert. Cite facts from CONTEXT.",

"userMessage": "=Question: {{ $node[\"Ask (Webhook)\"].json.body.question }}\n\nCONTEXT: {{ $items(\"Top-k Retrieve\").map(i => i.json.chunk).join(\"\\n---\\n\") }}"

},

"typeVersion": 1,

"position": [1400, 240],

"credentials": { "ollamaApi": "Ollama Local" }

},

{

"id": "respond",

"name": "Respond",

"type": "n8n-nodes-base.respondToWebhook",

"parameters": { "responseBody": "={{$json.text || $json}}"},

"typeVersion": 1,

"position": [1660, 240]

}

],

"connections": {

"Ask (Webhook)": { "main": [[{ "node": "Split to Chunks", "type": "main", "index": 0 }]] },

"Split to Chunks": { "main": [[{ "node": "Embeddings (Ollama)", "type": "main", "index": 0 }]] },

"Embeddings (Ollama)": { "main": [[{ "node": "Top-k Retrieve", "type": "main", "index": 0 }]] },

"Top-k Retrieve": { "main": [[{ "node": "Answer (Ollama Model)", "type": "main", "index": 0 }]] },

"Answer (Ollama Model)": { "main": [[{ "node": "Respond", "type": "main", "index": 0 }]] }

}

}

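Swap the “Top-k Retrieve” stub for your vector DB (Postgres+pgvector, SQLite+cosine, etc.). The important part is Embeddings Ollama → store vectors → Answer (Ollama Model) with retrieved context. Treat the two Ollama entries as placeholders: in n8n they are sub-nodes that attach to a parent node (a vector store for embeddings, a chain or agent for the model), so after importing you may need to re-add them from the node panel and reconnect them. (n8n Docs)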
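For the SQLite+cosine option, a workable starting point is to store each vector as JSON text and rank rows in application code. The sketch below does exactly that with Python's built-in sqlite3 module. The table layout and function names are assumptions for illustration, and the brute-force scan is only sensible for small collections.

```python
import json
import math
import sqlite3


def open_store(path=":memory:"):
    """Open (or create) a tiny chunk store backed by SQLite."""
    db = sqlite3.connect(path)
    db.execute(
        "CREATE TABLE IF NOT EXISTS chunks ("
        "id INTEGER PRIMARY KEY, text TEXT NOT NULL, vector TEXT NOT NULL)"
    )
    return db


def add_chunk(db, text, vector):
    """Store one chunk with its embedding serialized as JSON."""
    db.execute(
        "INSERT INTO chunks (text, vector) VALUES (?, ?)",
        (text, json.dumps(vector)),
    )
    db.commit()


def cosine(a, b):
    """Cosine similarity; returns 0.0 if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def search(db, query_vector, k=3):
    """Return the k chunk texts most similar to the query vector."""
    rows = db.execute("SELECT text, vector FROM chunks").fetchall()
    scored = [(cosine(query_vector, json.loads(vec)), text) for text, vec in rows]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [text for _, text in scored[:k]]
```

Once the collection grows beyond what a full scan can handle comfortably, that is the signal to move to a store with a real vector index, such as pgvector.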

Troubleshooting FAQ

The OpenAI node won’t connect to my local endpoint.

Confirm Base URL = http://localhost:11434/v1 and that your model name exists in ollama list. If n8n runs in Docker, use the Ollama container hostname instead (http://ollama:11434/v1). (GitHub)

Responses differ from OpenAI.

That’s normal—models differ. Choose the best local model for your task and tune prompts. (n8n Community)

Which models are current “safe bets”?

Llama 3.1 (8B/70B), Mistral 7B for speed, code-tuned variants for dev tasks; check Ollama Library for fresh tags. (Ollama)

Conclusion

Local AI is no longer a hack—it’s a strategy. With n8n as your automation brain and Ollama as your on-device LLM engine, you get privacy, speed, and freedom from cloud bills.

Key takeaways:

- Point n8n’s OpenAI nodes at http://localhost:11434/v1 or use native Ollama nodes. (Ollama)

- Use Embeddings Ollama for private RAG. (n8n Docs)

- Start with smaller models; scale up as needed. (Ollama)

Next steps: wire this into a real workflow—support inbox triage, on-prem knowledge search, or code review summaries—then benchmark models and iterate.

What will you build first with your new local AI stack?

Sources & Further Reading:

- Ollama OpenAI-compatible API overview and blog. (Ollama Documentation)

- n8n Embeddings Ollama node docs & integration page. (n8n Docs)

- Ollama Library (models & tags) and GitHub. (Ollama)

- n8n OpenAI node docs and community threads on OpenAI-compatible setups. (n8n Docs)
