Introduction

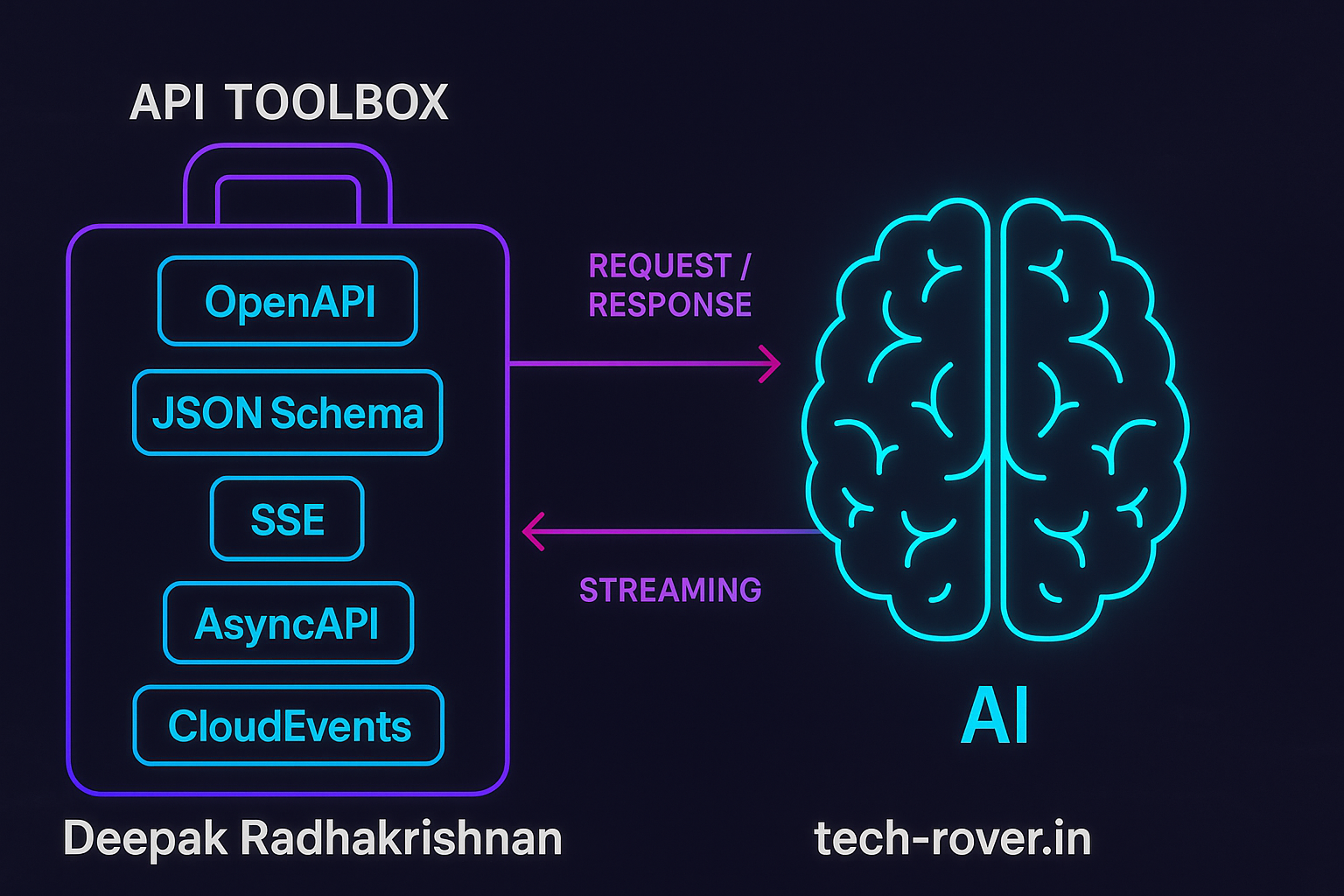

APIs weren’t originally built with large language models in mind. But today, your API is increasingly being called by agents, copilots, and orchestration frameworks—not just human developers. That means different ergonomics: strict, machine-verifiable schemas; predictable errors; streaming; low latency; and event-driven hooks. In this guide, I’ll show you how to build AI-ready APIs that models can call reliably, with concrete patterns you can copy-paste. We’ll anchor on OpenAPI 3.1 + JSON Schema for contracts, problem+json for errors, SSE for streaming, and AsyncAPI/CloudEvents for events, plus tool specs for structured outputs. I’ll also point to current docs so you’re not building on stale assumptions. (swagger.io)

What makes an API “AI-ready” (right now)

AI agents prefer APIs that are:

- Self-describing with machine-verifiable contracts (OpenAPI 3.1 + JSON Schema 2020-12). (swagger.io)

- Deterministic in shape and behavior (stable fields, idempotency, versioning).

- Stream-capable (SSE/EventSource or WebSocket) for progressive results and token-by-token UX. (MDN Web Docs)

- Consistent on errors (RFC 7807 / RFC 9457 problem details). (IETF Datatracker)

- Event-driven where appropriate (AsyncAPI + CloudEvents). (asyncapi.com)

- Structured-output friendly so LLM tools can parse exactly (JSON Schema–backed outputs). (OpenAI Platform)

There’s also a fast-moving ecosystem around “LLM-ready” integration patterns; see recent industry write-ups for design angles and pitfalls. (gravitee.io)

Specify your contract with OpenAPI 3.1 + JSON Schema

OpenAPI 3.1 is natively aligned to JSON Schema 2020-12, which is exactly what modern structured-output features expect. That lets you use one schema for docs, validation, SDKs, and model outputs. (swagger.io)

Minimal 3.1 spec (with problem details)

openapi: 3.1.0

info:

title: Product Search API

version: 1.0.0

servers:

- url: https://api.example.com

paths:

/v1/products/search:

get:

summary: Search products by query and facets

parameters:

- in: query

name: q

required: true

schema: { type: string, minLength: 1 }

- in: query

name: cursor

schema: { type: string, description: Opaque pagination cursor }

responses:

'200':

description: OK

content:

application/json:

schema:

$ref: '#/components/schemas/ProductSearchResult'

'4XX':

description: Client error (RFC 7807)

content:

application/problem+json:

schema:

$ref: '#/components/schemas/Problem'

components:

schemas:

Money:

type: object

required: [currency, amount]

properties:

currency: { type: string, pattern: '^[A-Z]{3}$' }

amount: { type: number }

Product:

type: object

required: [id, title, price]

properties:

id: { type: string }

title: { type: string }

price: { $ref: '#/components/schemas/Money' }

ProductSearchResult:

type: object

required: [items, nextCursor]

properties:

items: { type: array, items: { $ref: '#/components/schemas/Product' } }

nextCursor: { type: [string, "null"] }

Problem: # RFC 7807 / 9457 base

type: object

properties:

type: { type: string, format: uri }

title: { type: string }

status: { type: integer }

detail: { type: string }

instance: { type: string }

This gives agents predictable shapes and standard errors they can branch on. (swagger.io)

Stream results with Server-Sent Events (SSE)

Models and agentic UIs often want partial results. SSE is a simple, HTTP-friendly push channel that most clients can consume with EventSource. (MDN Web Docs)

Node (Express) SSE endpoint

import express from "express";

const app = express();

app.get("/v1/search/stream", (req, res) => {

res.setHeader("Content-Type", "text/event-stream");

res.setHeader("Cache-Control", "no-cache");

res.setHeader("Connection", "keep-alive");

const q = String(req.query.q ?? "");

if (!q) {

res.status(400).end(); return;

}

// Emit chunks as they arrive from your search/backends

const send = (event: string, data: unknown) => {

res.write(`event: ${event}\n`);

res.write(`data: ${JSON.stringify(data)}\n\n`);

};

send("meta", { q, startedAt: Date.now() });

// Simulate chunks

const timer = setInterval(() => {

const item = { id: crypto.randomUUID(), title: `Result for ${q}` };

send("item", item);

}, 250);

// Complete after 10 items

setTimeout(() => {

clearInterval(timer);

send("done", { count: 10 });

res.end();

}, 3000);

});

app.listen(3000);

Browser consumer

<script>

const es = new EventSource("/v1/search/stream?q=laptops");

es.addEventListener("item", e => {

const item = JSON.parse(e.data);

// Append to DOM or stream to an LLM tool

});

es.addEventListener("done", () => es.close());

</script>

Make outputs “tool-callable” with JSON Schema

Modern LLM platforms can guarantee JSON that conforms to your schema—perfect for tool calls. Define the same schema you expose in OpenAPI, then reference it when asking the model to call your API. (OpenAI Platform)

Example: tool schema for “searchProducts”

{

"name": "searchProducts",

"description": "Search the catalog and return paginated results.",

"parameters": {

"type": "object",

"properties": {

"q": { "type": "string", "minLength": 1 },

"cursor": { "type": "string" }

},

"required": ["q"],

"additionalProperties": false

}

}

When the model returns arguments that match this schema, your orchestrator can directly call /v1/products/search and trust the shapes.

Errors that agents can reason about (problem+json)

Return application/problem+json for failures. Models can branch on type, status, and title consistently across endpoints.

{

"type": "https://api.example.com/errors/invalid-parameter",

"title": "Invalid parameter: q",

"status": 400,

"detail": "Parameter 'q' must be at least 1 character.",

"instance": "urn:trace:3c2b..."

}

This follows RFC 7807 / RFC 9457 and avoids bespoke error formats. (IETF Datatracker)

Pagination, idempotency, caching, and versioning

Small operational details make a huge difference to AI orchestrators.

Pagination: Prefer cursor-based (nextCursor) over page numbers to avoid duplicates and race conditions.

Idempotency: Accept an Idempotency-Key header on mutating endpoints and reuse results for retries.

Caching: Support ETag/If-None-Match for GETs; many agent loops hammer the same resources.

Versioning: Use a header or URL prefix (/v1) and keep breaking changes behind new versions.

(These patterns reflect modern REST guidance from industry-standard playbooks.) (Postman)

Example: conditional GET with ETag

GET /v1/products/123 HTTP/1.1

If-None-Match: "W/\"27b9-abc123\""

HTTP/1.1 304 Not Modified

ETag: W/"27b9-abc123"

Example: idempotent POST

POST /v1/orders HTTP/1.1

Idempotency-Key: d3f3a5b8-...

Content-Type: application/json

{ "items": [{"sku":"ABC-123","qty":1}] }

Events your agents can subscribe to (AsyncAPI + CloudEvents)

When an LLM initiates a job, it’s nice to notify when the downstream workflow completes. Describe your channels with AsyncAPI, and put event payloads in CloudEvents format so they’re consistent. (asyncapi.com)

Tiny AsyncAPI snippet with CloudEvents data

asyncapi: 3.0.0

info: { title: Catalog Events, version: 1.0.0 }

channels:

product/updated:

address: product.updated

messages:

productEvent:

$ref: '#/components/messages/cloudEvent'

components:

messages:

cloudEvent:

name: CloudEvent

payload:

type: object # CloudEvents structured content

required: [specversion, id, source, type, time, data]

properties:

specversion: { type: string, const: "1.0" }

id: { type: string }

source: { type: string, format: uri }

type: { type: string, const: "com.example.product.updated" }

time: { type: string, format: date-time }

data:

$ref: '#/components/schemas/Product'

schemas:

Product:

type: object

required: [id, title]

properties:

id: { type: string }

title: { type: string }

Practical end-to-end example (FastAPI)

Spin up one service that supports OpenAPI 3.1 contracts, SSE streaming, problem+json errors, cursor pagination, and idempotent POST.

# uvicorn app:app --reload

from fastapi import FastAPI, Request, Header, HTTPException

from fastapi.responses import JSONResponse, StreamingResponse

from pydantic import BaseModel

import asyncio, json, hashlib

app = FastAPI(

title="AI-Ready Catalog",

version="1.0.0",

# FastAPI exports OpenAPI; ensure your generator supports OAS 3.1 for JSON Schema 2020-12

)

# Schemas

class Money(BaseModel):

currency: str

amount: float

class Product(BaseModel):

id: str

title: str

price: Money

class SearchResult(BaseModel):

items: list[Product]

nextCursor: str | None = None

# In-memory demo

DATA = [Product(id=f"p{i}", title=f"Laptop {i}", price=Money(currency="USD", amount=999+i)) for i in range(10)]

IDEMPOTENCY = {}

@app.get("/v1/products/search", response_model=SearchResult)

async def search(q: str, cursor: str | None = None):

if not q:

return problem(400, "invalid-parameter", "Parameter 'q' must be at least 1 character.")

start = int(cursor or 0)

items = [p for p in DATA if q.lower() in p.title.lower()][start:start+3]

next_cursor = None if start+3 >= len(DATA) else str(start+3)

return SearchResult(items=items, nextCursor=next_cursor)

@app.get("/v1/search/stream")

async def stream(q: str):

if not q:

return problem(400, "invalid-parameter", "Parameter 'q' must be at least 1 character.")

async def gen():

yield "event: meta\ndata: {}\n\n"

for p in [p for p in DATA if q.lower() in p.title.lower()]:

await asyncio.sleep(0.2)

yield f"event: item\ndata: {p.json()}\n\n"

yield "event: done\ndata: {}\n\n"

return StreamingResponse(gen(), media_type="text/event-stream")

@app.post("/v1/orders")

async def create_order(payload: dict, Idempotency_Key: str | None = Header(default=None)):

key = Idempotency_Key or hashlib.sha256(json.dumps(payload).encode()).hexdigest()

if key in IDEMPOTENCY:

return IDEMPOTENCY[key] # same result as first call

# pretend to create an order

result = JSONResponse({"orderId": "ord_123", "status": "created"})

IDEMPOTENCY[key] = result

return result

def problem(status: int, type_slug: str, detail: str):

return JSONResponse(

status_code=status,

content={

"type": f"https://api.example.com/errors/{type_slug}",

"title": "Invalid parameter",

"status": status,

"detail": detail

},

media_type="application/problem+json"

)

This covers the core interaction patterns your AI consumer expects. For production, add ETag support, auth, observability, and strict JSON Schema validation.

Advanced techniques & ecosystem notes

- Model Context Protocol (MCP): an emerging standard that lets tools expose capabilities to many LLMs via a common protocol—useful if you operate many microservices/tools. Consider publishing your API as an MCP server alongside your HTTP contract. (IT Pro)

- Latency budgets: Agents chain calls; every 200–300 ms saved compounds. Prefer colocated compute + DB, gzip/br, HTTP/2, and streaming first tokens.

- Safety & guardrails: Validate inputs server-side (don’t trust model arguments), cap list sizes, and sanitize text fields.

- Observability: Correlate each request with a trace ID and log prompt-aware context (which tool/schema version, which agent).

- Change management: Ship additive changes first; announce breaking changes behind

/v2with side-by-side “dual-read” periods.

Common pitfalls (and how to avoid them)

- Free-form responses: If your endpoint returns prose mixed with JSON, LLMs will struggle. Return only JSON (or only SSE events).

- Branching in prompts: Push conditionals/loops into code, not prompts; keep the tool spec simple and deterministic. (Medium)

- Non-standard errors: Use problem+json so agents can branch on

status/typereliably. (IETF Datatracker) - Page-number pagination: Switch to cursors to avoid duplication and race conditions.

- Opaque docs: Publish your OpenAPI 3.1 and AsyncAPI specs; keep them in CI so they never drift. (swagger.io)

Conclusion

If you do nothing else, do these five things:

- Publish OpenAPI 3.1 with JSON Schema.

- Return problem+json errors.

- Add SSE endpoints for long-running or progressive operations.

- Support cursor pagination, idempotency keys, and ETag.

- Describe events with AsyncAPI and CloudEvents.

These changes make your API dramatically easier for agents and humans alike. Want a quick win? Start by upgrading your contract to OpenAPI 3.1 and wiring up SSE on the slowest endpoints—then iterate.

Leave a Reply